The Moat Is Shifting: From Model Size to Signal Quality in Enterprise AI

Why this shift matters now

Most enterprises are learning that scaling AI is far easier to say than do. As the MIT 2025 State of AI in Business pointed out, 95% of generative AI pilots never make it to production. That’s not a failure of models; it’s a failure of data. With any technology as transformative as AI, adoption comes in waves. Each wave reveals the real innovators while washing away the hype built on weak data foundations.

AI doesn’t run on hope or headlines; it runs on signal quality.

And “AI-ready” data, according to Modern Data 101, means 99%+ accuracy and consistency, a far cry from the 60–70% quality most legacy systems operate on. That delta is the moat.

This is why the real competition in AI isn’t in LLM parameter counts; it’s in who can capture and operationalize external context (events, weather, mobility, sentiment, and supply shifts) that explains the 90%+ of demand variability that models can’t see.

Proof points: The edge is in the signal

Take ZestyAI, for example. In a category once dominated by actuarial tables and generic risk scores, they’ve built a competitive moat by fusing internal property data with external real-world signals, such as satellite and aerial imagery, climate models, and vegetation data, to predict wildfire and storm risk at the level of a single home. The breakthrough wasn’t a bigger model; it was richer signal context. By combining what insurers already know (building materials, policy history) with what’s changing outside their walls (weather, tree cover, regional hazard data), ZestyAI delivers risk assessments traditional models could never see. It’s a clear illustration of where the real edge in AI now lies: in connecting inside data to outside reality.

Similarly, Signal AI has built a platform that turns 75 languages of global media coverage into structured insights for enterprise risk and reputation teams. Their moat isn’t in model novelty. It’s in the proprietary data pipeline — thousands of unique, normalized external signals that no competitor can replicate overnight.

And if you zoom out, the financial sector has known this for decades. Hedge funds that use alternative data, such as shipping logs, satellite imagery, and event schedules, outperform those relying purely on price and fundamentals. The logic is universal: the firm with the best signal wins, not the one with the biggest compute budget.

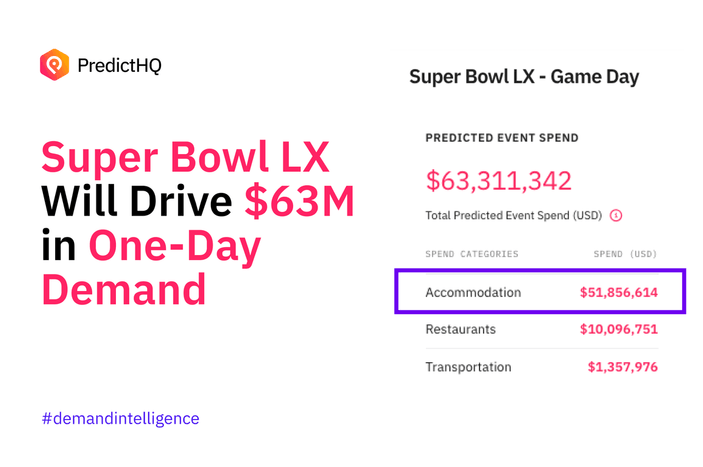

PredictHQ operates on that same philosophy. By quantifying the real-world impact of live events, from concerts and sports to severe weather and school holidays, it gives enterprise models a feed of external signals that explain much of the “unexplained variance” traditional AI misses. It’s the difference between predicting a sales spike and knowing what’s driving it.
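To make the idea of “unexplained variance” concrete, here is a minimal sketch in Python. It is not PredictHQ's method or API. The numbers are synthetic and purely illustrative, and the names `is_weekend`, `event_attendance`, and `units_sold` are assumptions introduced for this example. The sketch fits a model on an internal feature alone, then fits what is left over against an external event signal, and compares how much of the variation in sales each version explains (R²).

```python
from statistics import mean


def fit_line(x, y):
    """Ordinary least squares for y = intercept + slope * x."""
    x_bar, y_bar = mean(x), mean(y)
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return y_bar - slope * x_bar, slope


def r_squared(actual, predicted):
    """Share of the variance in `actual` that `predicted` accounts for."""
    avg = mean(actual)
    ss_total = sum((a - avg) ** 2 for a in actual)
    ss_residual = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    return 1 - ss_residual / ss_total


# Synthetic two-week example (hypothetical values, not real data).
is_weekend = [0, 0, 0, 0, 0, 1, 1, 0, 0, 0, 0, 0, 1, 1]          # internal feature
event_attendance = [0, 0, 5, 0, 0, 20, 0, 0, 0, 0, 12, 0, 0, 30]  # external signal, thousands
units_sold = [100, 102, 111, 99, 101, 160, 121, 98, 103, 100, 124, 101, 119, 181]

# Stage 1: the model only sees what the business already knows.
a0, b0 = fit_line(is_weekend, units_sold)
internal_only = [a0 + b0 * w for w in is_weekend]
r2_internal = r_squared(units_sold, internal_only)

# Stage 2: explain the leftover variation with the external event signal.
residuals = [u - p for u, p in zip(units_sold, internal_only)]
a1, b1 = fit_line(event_attendance, residuals)
with_signal = [p + a1 + b1 * e for p, e in zip(internal_only, event_attendance)]
r2_with_signal = r_squared(units_sold, with_signal)

print(f"R² internal only:        {r2_internal:.2f}")
print(f"R² with external signal: {r2_with_signal:.2f}")
```

The two-stage fit is chosen for readability, not rigor; a production forecast would more likely fit all features jointly and validate on held-out data. The point is only that the gap between the two R² values is the part of demand the internal data could not see.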

The false comfort of “build it yourself”

Many enterprise AI teams fall into the trap of thinking data pipelines are just another engineering task. They’re not.

Building a proprietary signal layer typically takes 5–7 years and $50M+, and that’s if the right partnerships, infrastructure, and feedback loops are already in place. For most companies, it’s like trying to build your own power grid to run your new computer.

A recent HP analysis on enterprise AI made this plain: internal “build” projects stall not because of poor models, but because the data supply chain required to support them (the ingestion, normalization, labeling, and governance of signals) proves too complex and expensive to maintain. That is why, increasingly, enterprises are choosing to buy or partner for signal, not build it.

The flywheel advantage

The companies that own unique signals and can continuously feed, test, and learn from them create a compounding advantage. Each model interaction makes the signal cleaner, the predictions sharper, and the moat deeper.

It’s a flywheel that rewards quality over quantity.

The feedback loop is simple to understand, complex to build, but extremely powerful:

- Ingest proprietary signals.

- Feed into models and decision systems.

- Learn from real-world outcomes.

- Refine the signals and repeat.
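The four steps above can be sketched as a small loop. This is a toy illustration under stated assumptions, not a description of any vendor's system: the `SignalSource` class, the source names `event_feed` and `legacy_estimate`, the weighting rule, and the sample numbers are all hypothetical. Each cycle ingests a reading from every source, blends them into a forecast, compares against the observed outcome, and reduces trust in sources that missed.

```python
from dataclasses import dataclass


@dataclass
class SignalSource:
    name: str
    weight: float = 1.0  # how much the decision system trusts this source


def predict(sources, readings):
    """Steps 1-2: ingest each source's estimate and blend by trust weight."""
    total_weight = sum(s.weight for s in sources)
    return sum(s.weight * readings[s.name] for s in sources) / total_weight


def refine(sources, readings, outcome, learning_rate=0.5):
    """Steps 3-4: learn from the outcome and down-weight sources that missed it."""
    for s in sources:
        relative_error = abs(readings[s.name] - outcome) / max(abs(outcome), 1e-9)
        s.weight *= 1.0 / (1.0 + learning_rate * relative_error)


def run_cycle(sources, readings, outcome):
    forecast = predict(sources, readings)
    refine(sources, readings, outcome)
    return forecast


# Hypothetical sources and observations (illustrative values only).
sources = [SignalSource("event_feed"), SignalSource("legacy_estimate")]
cycles = [
    ({"event_feed": 100, "legacy_estimate": 140}, 100),
    ({"event_feed": 210, "legacy_estimate": 150}, 200),
    ({"event_feed": 95, "legacy_estimate": 140}, 100),
]

forecasts = [run_cycle(sources, readings, outcome) for readings, outcome in cycles]

for s in sources:
    print(f"{s.name}: weight {s.weight:.2f}")
```

After a few cycles the source that tracks reality more closely carries more of the forecast. That is the compounding effect in miniature: every observed outcome is used to sharpen the signal mix for the next decision.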

Over time, this dynamic creates a durable advantage layer, something that persists even as foundational models, infrastructure, and tooling commoditize.

What this means for enterprise leaders

For anyone shaping AI strategy, the questions are shifting fast.

- Not “Which model are we using?” but “What signals are shaping its decisions?”

- Not “Do we have enough data?” but “Do we have the right data: clean, contextual, and alive?”

- Not “How do we build faster?” but “How do we keep learning from reality?”

Owning a model is a moment in time. Building a living signal ecosystem is a lasting edge. Foundational models will continue to require vast amounts of compute until innovation slows that need, but for enterprises looking to gain an edge right now, the focus should be on context. The companies that shift their mindset from scaling models to curating signals will lead the next wave, not chase it. As the noise grows louder, signal quality will separate what endures from what fades.
