Get Started

Contact our data science experts to find out the best solutions for your business. We'll get back to you within 1 business day.

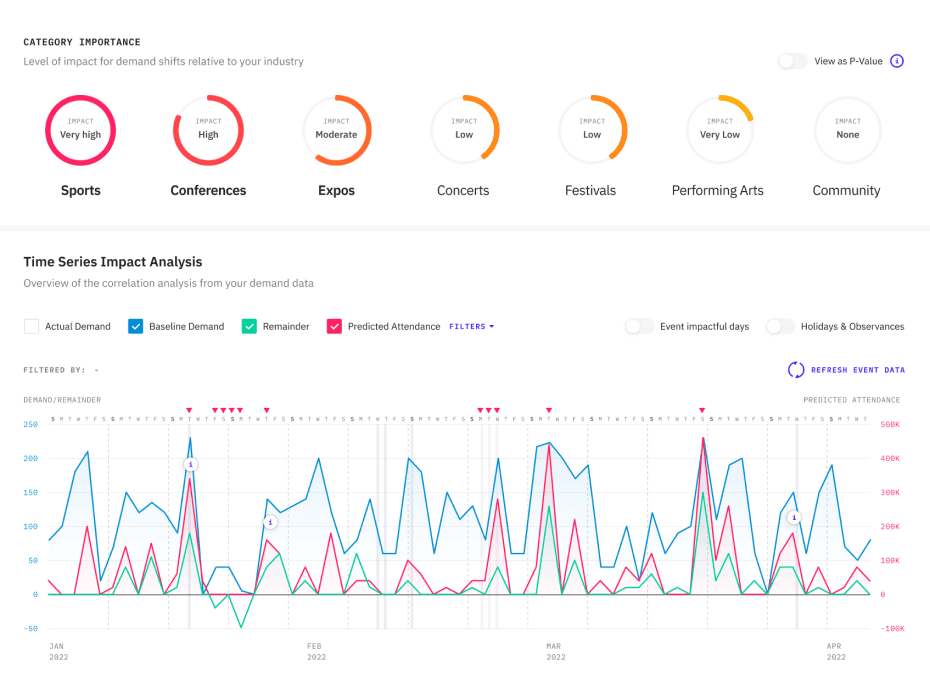

Events vary in size, impact, location, frequency and duration, and raw event data is unstandardized and unverified. This makes correlating events with demand complicated and time-consuming without PredictHQ.

Securely upload your demand data into our relevancy engine tool, Beam, to understand what drives your demand in seconds.

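```python
# Illustrative pseudocode: PredictHQ_API, TransformToDataFrame, ForecastingModel
# and DataFrame are placeholder names for your own data-access and modeling code.

# Fetch aggregated event impact data from PredictHQ
phq_agg_data = PredictHQ_API(type='aggregate_event_impact')

# Convert the response into a tabular feature set
phq_aggregate_data_df = TransformToDataFrame(phq_agg_data)

# Train a forecasting model on your demand history plus the event features
model = ForecastingModel()
model.fit(your_demand_data, phq_aggregate_data_df)

# Forecast demand over a 60-period horizon
future_demand = DataFrame(period=60)
forecast = model.predict(future_demand)
```

Finding the statistical relationship between historical events and transactional demand helps businesses understand event impact. Transactional demand patterns can be complex, and relevant event data can be difficult to source and process, so advanced time series modeling can take months to complete.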
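The pseudocode above leaves the model unspecified. As a minimal runnable sketch, assuming a single daily event-impact feature and a plain one-variable linear regression (a stand-in chosen for illustration, not PredictHQ's method), demand can be regressed on event impact and then projected over a 60-period horizon from the impact of already-scheduled future events. The data and the helper names `fit_linear` and `predict` are hypothetical.

```python
from statistics import mean


def fit_linear(x, y):
    """Ordinary least squares for y = intercept + slope * x (one feature)."""
    x_bar, y_bar = mean(x), mean(y)
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("event feature has no variance; cannot fit a slope")
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return intercept, slope


def predict(model, x_future):
    """Apply the fitted line to future event-impact values."""
    intercept, slope = model
    return [intercept + slope * xi for xi in x_future]


# Hypothetical history: daily event impact (e.g. attendance in thousands) and demand
event_impact = [0, 2, 0, 5, 1, 0, 3]
demand = [100, 120, 100, 150, 110, 100, 130]

model = fit_linear(event_impact, demand)

# Hypothetical impact of scheduled events over the next 60 periods
future_impact = [0, 4] * 30
forecast = predict(model, future_impact)
```

Because the toy demand was built as 100 plus 10 times event impact, the fit recovers an intercept of about 100 and a slope of about 10. A production model would also account for trend, seasonality and several event features.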

Beam converts unstructured, dynamic event data into a workable dataset. It then decomposes your data into a baseline and a remainder and shows you how it correlates with event data. We created our Demand Analysis tool to turn months of work into minutes. Take the work out of establishing correlation so your teams can spend more time perfecting forecasting models.
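A minimal sketch of the baseline/remainder idea, assuming a centered rolling median as the baseline (a simple stand-in, not Beam's actual decomposition): subtract the baseline from demand, then compute the Pearson correlation between the remainder and a daily event-impact series. The data and the helper names `rolling_median`, `decompose` and `pearson` are hypothetical.

```python
from statistics import median


def rolling_median(values, window):
    """Centered rolling median; windows are truncated at the series edges."""
    half = window // 2
    out = []
    for i in range(len(values)):
        lo, hi = max(0, i - half), min(len(values), i + half + 1)
        out.append(median(values[lo:hi]))
    return out


def decompose(demand, window=7):
    """Split demand into a baseline and the remainder left after removing it."""
    baseline = rolling_median(demand, window)
    remainder = [d - b for d, b in zip(demand, baseline)]
    return baseline, remainder


def pearson(x, y):
    """Pearson correlation coefficient between two equal-length series."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    if vx == 0 or vy == 0:
        raise ValueError("correlation is undefined for a constant series")
    return cov / (vx * vy) ** 0.5


# Hypothetical daily demand with spikes on event days, plus matching event impact
demand = [100, 100, 140, 100, 100, 100, 160, 100, 100, 100, 120, 100, 100, 100]
event_impact = [0, 0, 4, 0, 0, 0, 6, 0, 0, 0, 2, 0, 0, 0]

baseline, remainder = decompose(demand, window=7)
r = pearson(remainder, event_impact)
```

A median is used rather than a mean so that isolated event spikes do not pull the baseline upward. In this toy series the spikes are exactly proportional to event impact, so the correlation comes out at 1; real demand will be noisier.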

Our technical documentation gives step-by-step directions for getting started, so you can begin improving your demand forecasting models today.

Transforming the data is typically the most time-consuming and error-prone part of the process. Extracting features from raw data that better represent the underlying problem to predictive models is critical for model accuracy. Better features mean better results.
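As an illustration of turning raw event records into model-ready features, the sketch below aggregates hypothetical events into one row per day with an event count and total attendance. The `Event` record, its fields, and the choice to spread a multi-day event's attendance evenly across its days are assumptions for illustration, not PredictHQ's data model or feature definitions.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Event:
    # Hypothetical raw event record; real event feeds use their own schemas.
    name: str
    start: date
    end: date        # inclusive last day of the event
    attendance: int  # total expected attendance across all days


def daily_features(events, first_day, last_day):
    """Aggregate raw events into per-day features over [first_day, last_day]."""
    n_days = (last_day - first_day).days + 1
    features = {
        first_day + timedelta(days=i): {"event_count": 0, "total_attendance": 0}
        for i in range(n_days)
    }
    for ev in events:
        # Assumption: spread a multi-day event's attendance evenly over its days.
        event_days = (ev.end - ev.start).days + 1
        per_day = ev.attendance / event_days
        day = max(ev.start, first_day)
        while day <= min(ev.end, last_day):
            features[day]["event_count"] += 1
            features[day]["total_attendance"] += per_day
            day += timedelta(days=1)
    return features


events = [
    Event("concert", date(2024, 6, 1), date(2024, 6, 1), 20000),
    Event("festival", date(2024, 6, 1), date(2024, 6, 3), 30000),
]
feats = daily_features(events, date(2024, 6, 1), date(2024, 6, 4))
```

Each day then becomes one row that can be joined to daily demand. Days with no events keep zero counts, so the feature table has no gaps.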

Contact our data science experts to find out the best solutions for your business. We'll get back to you within 1 business day.